References

https://www.ietf.org/rfc/rfc793

https://en.wikipedia.org/wiki/Transmission_Control_Protocol

https://en.wikipedia.org/wiki/Maximum_segment_lifetime

https://en.wikipedia.org/wiki/Silly_window_syndrome

https://en.wikipedia.org/wiki/Nagle%27s_algorithm

https://en.wikipedia.org/wiki/Retransmission_(data_networks)

https://en.wikipedia.org/wiki/TCP_congestion_control

https://en.wikipedia.org/wiki/TCP_delayed_acknowledgment

https://www.cnblogs.com/wuchanming/p/4028341.html

# sysctl -a | grep ipv4.tcp

# sysctl -a | grep core

# man tcp

TCP provides reliable, ordered, and error-checked delivery of a stream of octets between applications running on hosts communicating via an IP network.

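Summary

Sequence number: solves the packet-ordering problem.

Acknowledgment number: solves the packet-loss problem.

Sliding window: implements flow control.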

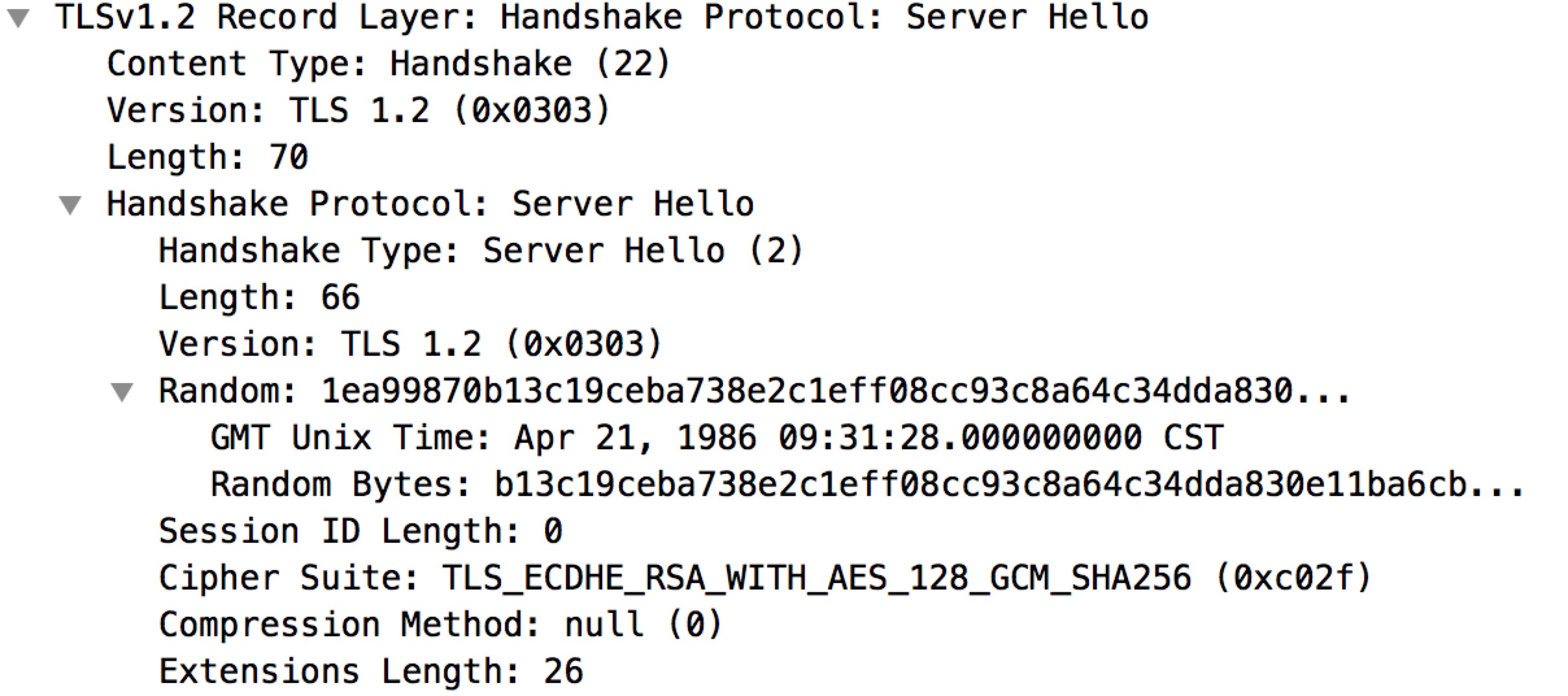

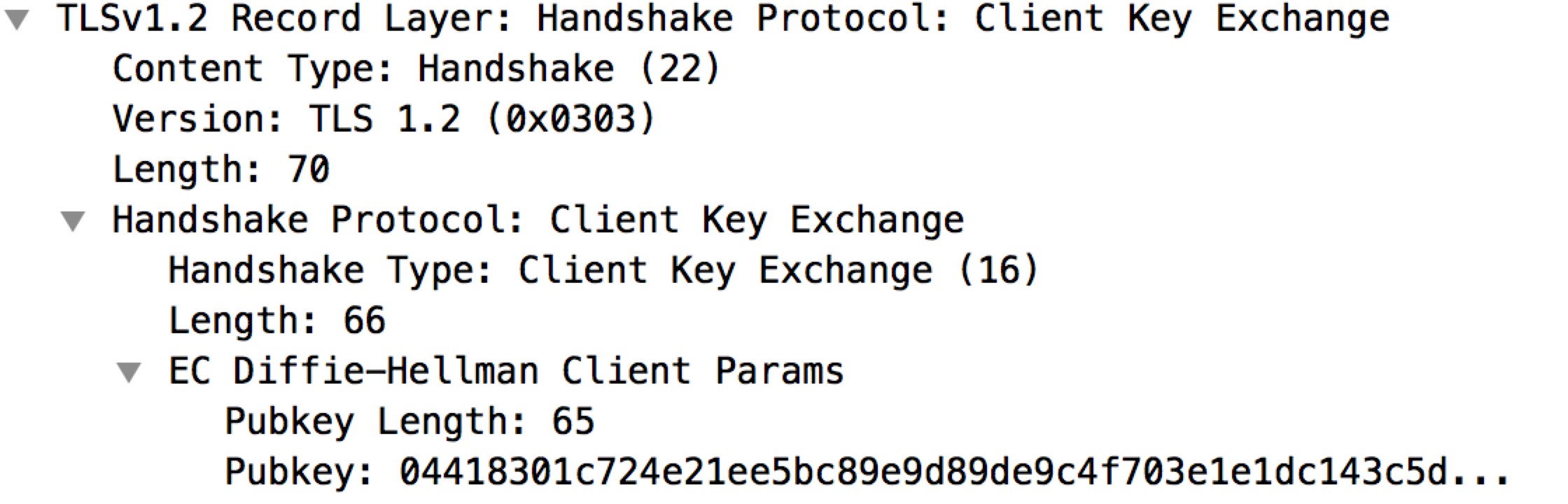

Connection establishment

To establish a connection, TCP uses a three-way handshake.

The three-way handshake: SYN, then SYN-ACK, then ACK.

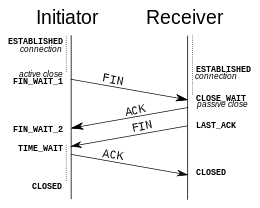

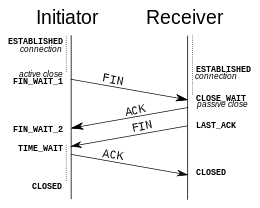
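The sequence and acknowledgment numbers exchanged during the handshake can be sketched as a toy model. This is only an illustration, not real packet handling: the function name `three_way_handshake` and the ISN values are made up for the example.

```python
def three_way_handshake(client_isn, server_isn):
    """Return the three handshake segments as (flags, seq, ack) tuples."""
    mod = 2 ** 32  # sequence numbers are 32-bit and wrap around
    syn = ("SYN", client_isn, None)
    # The SYN consumes one sequence number, so the server acks client_isn + 1.
    syn_ack = ("SYN,ACK", server_isn, (client_isn + 1) % mod)
    # The client acks the server's SYN in the same way.
    ack = ("ACK", (client_isn + 1) % mod, (server_isn + 1) % mod)
    return [syn, syn_ack, ack]


for segment in three_way_handshake(client_isn=1000, server_isn=5000):
    print(segment)
```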

Connection termination

The connection termination phase uses a four-way handshake, with each side of the connection terminating independently.

A connection can be "half-closed", in which case one side has terminated its end, but the other has not. The side that has terminated can no longer send any data into the connection, but the other side can. The terminating side should continue reading the data until the other side terminates as well.

Closing operations: close, shutdown

https://blog.csdn.net/zhangxiao93/article/details/52078784
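The difference between the two calls can be sketched with a pair of connected sockets. This is a minimal demonstration under assumptions: `socket.socketpair()` typically returns Unix-domain stream sockets rather than TCP ones, but `shutdown(SHUT_WR)` behaves analogously (one direction ends, the other stays usable). The helper name `half_close_demo` is made up for the example.

```python
import socket


def half_close_demo():
    # Two connected stream sockets standing in for the two ends of a connection.
    a, b = socket.socketpair()
    try:
        a.sendall(b"request")
        a.shutdown(socket.SHUT_WR)   # a will send no more data (comparable to sending FIN)
        received = b.recv(1024)      # b still gets what was sent before the shutdown
        eof = b.recv(1024)           # an empty read tells b that a has finished sending
        b.sendall(b"response")       # the b -> a direction is still open
        reply = a.recv(1024)         # a can keep reading after SHUT_WR
        return received, eof, reply
    finally:
        a.close()                    # close() releases the descriptor in both directions
        b.close()


print(half_close_demo())
```

In other words, `shutdown` ends one direction of the stream while keeping the socket, whereas `close` gives up the socket altogether.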

MSL

Maximum segment lifetime

Maximum segment lifetime is the time a TCP segment can exist in the internetwork system. It is arbitrarily defined to be 2 minutes long.

The Maximum Segment Lifetime value is used to determine the TIME_WAIT interval (2*MSL).

MSS

Maximum segment size

The maximum segment size (MSS) is the largest amount of data, specified in bytes, that TCP is willing to receive in a single segment.

ISN

Initial sequence number

The first sequence number used on a connection.

The generator is bound to a 32 bit clock whose low order bit is incremented roughly every 4 microseconds. Thus, the ISN cycles approximately every 4.55 hours.

Since we assume that segments will stay in the network no more than the Maximum Segment Lifetime (MSL) and that the MSL is less than 4.55 hours we can reasonably assume that ISN's will be unique.
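The wrap-around time of the ISN clock is simple arithmetic and worth checking. The sketch below assumes a tick of exactly 4 microseconds; with that value the result comes out at about 4.77 hours rather than the quoted 4.55 hours (which would correspond to a tick of roughly 3.8 microseconds). Either way the cycle is far longer than a 2-minute MSL, which is what the argument needs. The names `TICK_SECONDS` and `isn_cycle_hours` are illustrative.

```python
TICK_SECONDS = 4e-6   # assumed: one increment every 4 microseconds
CLOCK_BITS = 32


def isn_cycle_hours(tick_seconds=TICK_SECONDS, bits=CLOCK_BITS):
    """Time for the ISN clock to wrap around, in hours."""
    return (2 ** bits) * tick_seconds / 3600


print(f"{isn_cycle_hours():.2f} hours")
```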

RTT

Round trip time

Measure the elapsed time between sending a data octet with a particular sequence number and receiving an acknowledgment that covers that sequence number. This measured elapsed time is the Round Trip Time (RTT).

MTU

Maximum transmission unit

A network-layer concept (e.g., the IP protocol).

In computer networking, the maximum transmission unit (MTU) is the size of the largest network layer protocol data unit that can be communicated in a single network transaction.

Larger MTU is associated with reduced overhead. Smaller values can reduce network delay. In many cases MTU is dependent on underlying network capabilities and must be or should be adjusted manually or automatically so as not to exceed these capabilities.

The larger the MTU, the higher the communication efficiency, but the greater the transmission delay.
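The relationship between MTU, MSS and header overhead can be worked out directly. The sketch assumes 20-byte IPv4 and 20-byte TCP headers without options; the function names and the sample MTU values are chosen only for illustration.

```python
IPV4_HEADER = 20   # bytes, assuming no IP options
TCP_HEADER = 20    # bytes, assuming no TCP options


def mss_for_mtu(mtu):
    """Largest TCP payload that fits in one packet of the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


def header_overhead(mtu):
    """Fraction of a full-sized packet spent on IPv4 + TCP headers."""
    return (IPV4_HEADER + TCP_HEADER) / mtu


for mtu in (576, 1500, 9000):
    print(mtu, mss_for_mtu(mtu), f"{header_overhead(mtu):.1%}")
```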

Flags

SYN: Synchronize sequence numbers. Only the first packet sent from each end should have this flag set.

ACK: indicates that the Acknowledgment field is significant. All packets after the initial SYN packet sent by the client should have this flag set.

PSH: Push function. Asks to push the buffered data to the receiving application.

FIN: No more data from sender.

RST: Reset the connection.
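In the TCP header these flags are single bits, so a flags byte can be decoded with bit masks. The sketch below covers only the five flags listed above; the helper name `decode_flags` is made up for the example.

```python
# Bit positions of the flags within the TCP header's flags field.
TCP_FLAGS = {
    "FIN": 0x01,
    "SYN": 0x02,
    "RST": 0x04,
    "PSH": 0x08,
    "ACK": 0x10,
}


def decode_flags(bits):
    """Return the names of the flags that are set in `bits`."""
    return [name for name, mask in TCP_FLAGS.items() if bits & mask]


print(decode_flags(0x12))  # the second handshake segment carries SYN and ACK
```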

States

FIN-WAIT-1: represents waiting for an acknowledgment of the connection termination request previously sent.

CLOSE-WAIT: represents waiting for a connection termination request from the local user.

FIN-WAIT-2: represents waiting for a connection termination request from the remote TCP.

LAST-ACK: represents waiting for an acknowledgment of the connection termination request previously sent to the remote TCP.

TIME-WAIT: represents waiting for enough time to pass to be sure the remote TCP received the acknowledgment of its connection termination request.

Features

There are a few key features that set TCP apart from User Datagram Protocol:

1. Ordered data transfer: the destination host rearranges according to sequence number.

2. Retransmission of lost packets: any cumulative stream not acknowledged is retransmitted.

3. Flow control: limits the rate a sender transfers data to guarantee reliable delivery.

4. Congestion control

In short:

1. Ordering

2. Retransmission

3. Flow control

4. Congestion control

Timeout retransmission

Timeout-based retransmission mechanism

Whenever a packet is sent, the sender sets a timer that is a conservative estimate of when that packet will be acked. If the sender does not receive an ack by then, it transmits that packet again.

The timer is reset every time the sender receives an acknowledgement.

Retransmission timeout (RTO)

Because of the variability of the networks that compose an internetwork system and the wide range of uses of TCP connections the retransmission timeout must be dynamically determined.

Algorithm: see RFC 793.
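The RFC 793 procedure smooths the measured RTT and clamps the result between a lower and an upper bound. A minimal sketch follows, assuming example constants within the ranges the RFC suggests (ALPHA 0.8 to 0.9, BETA 1.3 to 2.0, bounds of 1 second and 1 minute). The function name `update_rto` and the sample values are illustrative, and later standards refine this calculation with a variance term.

```python
def update_rto(srtt, rtt, alpha=0.875, beta=2.0, lbound=1.0, ubound=60.0):
    """One RFC 793-style update; all times are in seconds."""
    # Smoothed round trip time: exponentially weighted moving average.
    srtt = alpha * srtt + (1 - alpha) * rtt
    # Retransmission timeout: scaled SRTT, clamped to [lbound, ubound].
    rto = min(ubound, max(lbound, beta * srtt))
    return srtt, rto


srtt = 0.5  # assumption: seed the estimate with the first sample
for sample in (0.5, 0.6, 2.0, 0.55):
    srtt, rto = update_rto(srtt, sample)
    print(f"rtt={sample:.2f} srtt={srtt:.3f} rto={rto:.3f}")
```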

Fast retransmit

Dupack-based retransmission mechanism

If a single packet (say packet 100) in a stream is lost, then the receiver cannot acknowledge packets above 100 because it uses cumulative ACKs. Hence the receiver acknowledges packet 99 again on the receipt of another data packet.

This duplicate acknowledgement is used as a signal for packet loss. That is, if the sender receives three duplicate acknowledgements, it retransmits the earliest unacknowledged packet (the one immediately after the packet being acknowledged repeatedly).

A threshold of three is used because the network may reorder packets causing duplicate acknowledgements.
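The duplicate-ACK rule can be sketched as a small counter. The example uses the packet numbering of the paragraph above, where an ACK names the last packet received in order, so three duplicates of ACK 99 trigger a retransmission of packet 100. The names `DUPACK_THRESHOLD` and `fast_retransmit_points` are made up for illustration.

```python
DUPACK_THRESHOLD = 3


def fast_retransmit_points(acks):
    """Return the packet numbers retransmitted for a sequence of received ACKs."""
    retransmits = []
    last_ack = None
    dup_count = 0
    for ack in acks:
        if ack == last_ack:
            dup_count += 1
            # Retransmit exactly once, when the third duplicate arrives.
            if dup_count == DUPACK_THRESHOLD:
                retransmits.append(ack + 1)
        else:
            last_ack = ack
            dup_count = 0
    return retransmits


# Packet 100 is lost; packets 101, 102 and 103 each trigger another ACK for 99.
print(fast_retransmit_points([97, 98, 99, 99, 99, 99]))
```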

Acknowledgment mechanisms

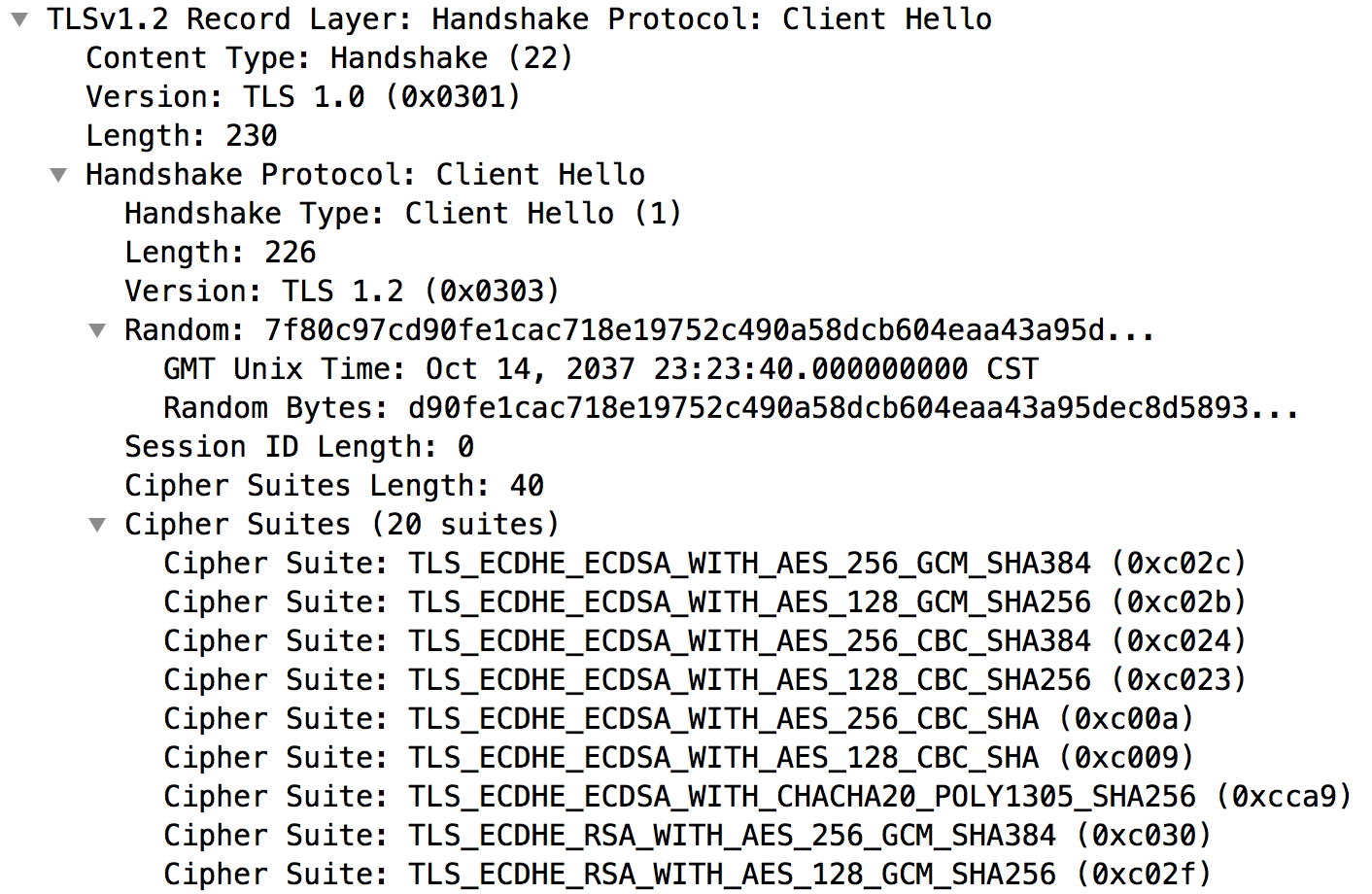

Selective acknowledgment (SACK)

Relying purely on the cumulative acknowledgment scheme employed by the original TCP protocol can lead to inefficiencies when packets are lost.

For example, suppose bytes with sequence number 1,000 to 10,999 are sent in 10 different TCP segments of equal size, and the second segment (sequence numbers 2,000 to 2,999) is lost during transmission. In a pure cumulative acknowledgment protocol, the receiver can only send a cumulative ACK value of 2,000 (the sequence number immediately following the last sequence number of the received data) and cannot say that it received bytes 3,000 to 10,999 successfully. Thus the sender may then have to resend all data starting with sequence number 2,000.

To alleviate this issue TCP employs the selective acknowledgment (SACK) option, defined in 1996 in RFC 2018, which allows the receiver to acknowledge discontinuous blocks of packets which were received correctly.
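The example above can be reproduced with a short sketch that derives both the cumulative ACK and the SACK blocks from the byte ranges a receiver holds. Ranges are written as (start, end) with the end exclusive, so bytes 3,000 to 10,999 appear as (3000, 11000). The function name `ack_state` is hypothetical, and sequence-number wrap-around is ignored.

```python
def ack_state(first_seq, received):
    """Compute (cumulative_ack, sack_blocks) from received (start, end) ranges."""
    # Merge overlapping or adjacent ranges into contiguous blocks.
    merged = []
    for start, end in sorted(received):
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])

    cumulative_ack = first_seq
    sack_blocks = []
    for start, end in merged:
        if start <= cumulative_ack:
            cumulative_ack = max(cumulative_ack, end)  # in-order data advances the ACK
        else:
            sack_blocks.append((start, end))           # data beyond a gap is reported via SACK
    return cumulative_ack, sack_blocks


segments = [(s, s + 1000) for s in range(1000, 11000, 1000)]
received = [seg for seg in segments if seg != (2000, 3000)]  # second segment lost
print(ack_state(1000, received))
```

With the SACK block in hand, the sender only needs to resend bytes 2,000 to 2,999.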

Cumulative acknowledgment

TCP delayed acknowledgment

TCP delayed acknowledgment is a technique used by some implementations of the Transmission Control Protocol in an effort to improve network performance.

In essence, several ACK responses may be combined together into a single response, reducing protocol overhead. However, in some circumstances, the technique can reduce application performance.

Flow control

TCP uses an end-to-end flow control protocol to avoid having the sender send data too fast for the TCP receiver to receive and process it reliably.

TCP uses a sliding window flow control protocol. In each TCP segment, the receiver specifies in the receive window field the amount of additionally received data (in bytes) that it is willing to buffer for the connection. The sending host can send only up to that amount of data before it must wait for an acknowledgment and window update from the receiving host.

When a receiver advertises a window size of 0, the sender stops sending data and starts the persist timer. The persist timer is used to protect TCP from a deadlock situation that could arise if a subsequent window size update from the receiver is lost, and the sender cannot send more data until receiving a new window size update from the receiver. When the persist timer expires, the TCP sender attempts recovery by sending a small packet so that the receiver responds by sending another acknowledgement containing the new window size.

If a receiver is processing incoming data in small increments, it may repeatedly advertise a small receive window. This is referred to as the silly window syndrome.

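To summarize: TCP uses an end-to-end flow control protocol (the sliding window protocol) to keep the sender from transmitting faster than the receiver can process.

The TCP header has a window field in which the receiver tells the sender how much buffer space it still has for incoming data; the sender sends no more than that amount.

When the receive window drops to 0, the sender stops sending and starts a timer. The timer prevents a deadlock in which the receiver's window update is lost and the sender can never resume. When the timer expires, the sender sends a small packet, and the receiver's acknowledgment carries the updated window size.

If the receiver processes only a little data at a time and therefore keeps advertising a very small window, the result is the silly window syndrome.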

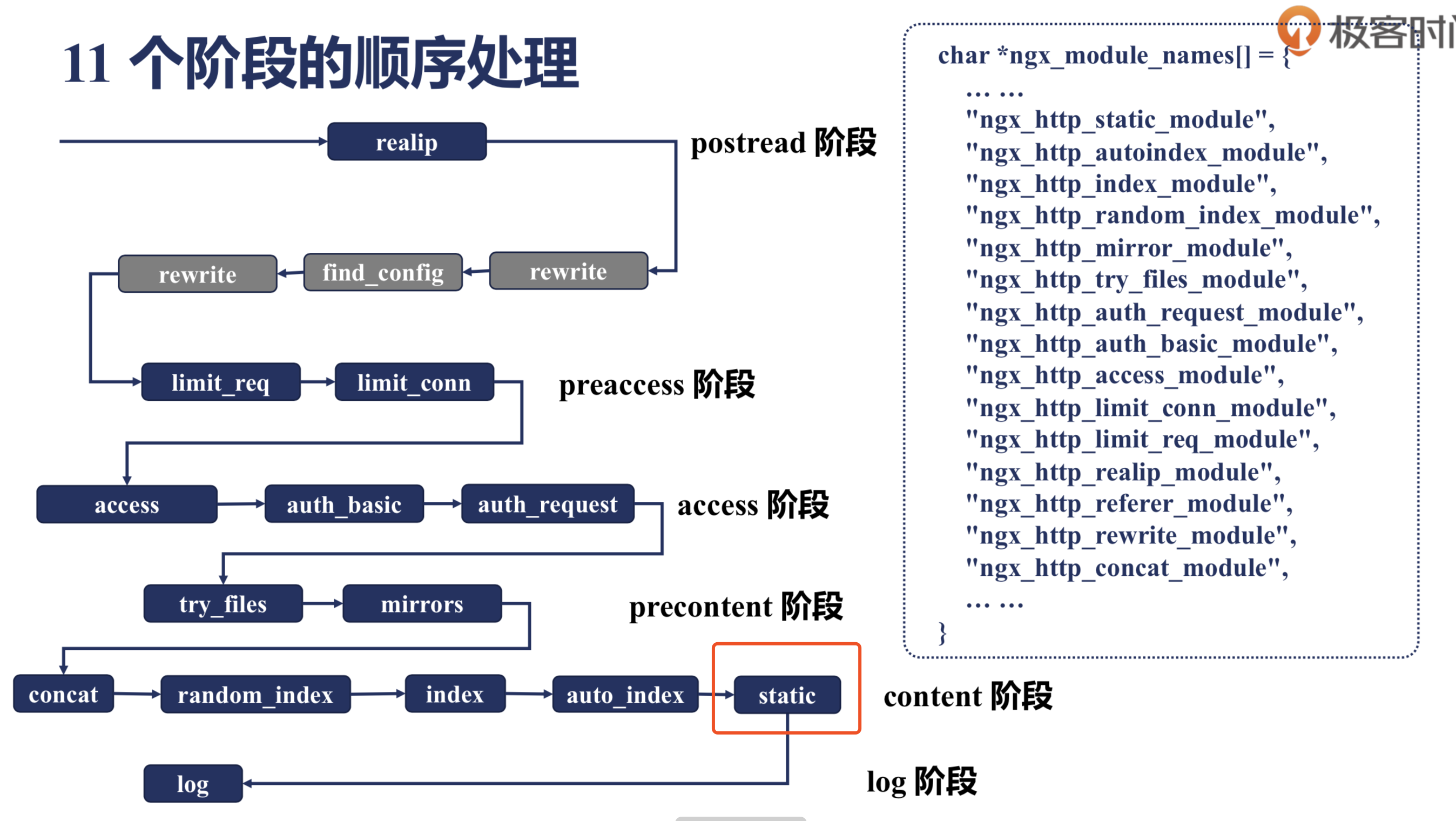

Sliding window protocol

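Sent and acknowledged

Sent but not yet acknowledged

Not yet sent (the receiver allows sending)

Not yet sent, and the receiver does not allow sending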
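These four categories follow from three sender-side values. The sketch below borrows the RFC 793 names SND.UNA (oldest unacknowledged byte), SND.NXT (next byte to send) and SND.WND (send window) as parameters; the function name `classify` is made up, and sequence-number wrap-around is ignored for simplicity.

```python
def classify(seq, snd_una, snd_nxt, snd_wnd):
    """Place a byte's sequence number into one of the four sender categories."""
    if seq < snd_una:
        return "sent and acknowledged"
    if seq < snd_nxt:
        return "sent but not yet acknowledged"
    if seq < snd_una + snd_wnd:
        return "not yet sent, receiver allows sending"
    return "not yet sent, receiver does not allow sending"


# Bytes below 100 are acknowledged, 100..149 are in flight, the window is 100 bytes.
for seq in (99, 120, 180, 200):
    print(seq, classify(seq, snd_una=100, snd_nxt=150, snd_wnd=100))
```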

Silly window syndrome

A serious problem can arise in the sliding window operation when the sending application program creates data slowly, the receiving application program consumes data slowly, or both. If a server with this problem is unable to process all incoming data, it requests that its clients reduce the amount of data they send at a time. If the server continues to be unable to process all incoming data, the window becomes smaller and smaller, sometimes to the point that the data transmitted is smaller than the packet header, making data transmission extremely inefficient.

When the silly window syndrome is created by the sender, Nagle's algorithm is used. Nagle's solution requires that the sender send the first segment even if it is a small one, then that it wait until an ACK is received or a maximum sized segment (MSS) is accumulated.

When the silly window syndrome is created by the receiver, David D Clark's solution is used. Clark's solution closes the window until another segment of maximum segment size (MSS) can be received or the buffer is half empty.

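If the silly window syndrome is caused by the sender, Nagle's algorithm applies: send the first small segment, then wait for an ACK or until a full MSS of data has accumulated before sending again.

If it is caused by the receiver, David D Clark's solution applies: keep the window closed until the buffer is half empty or a full MSS segment can be received, then reopen it.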
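Clark's receiver-side rule can be written as a single decision. This is a simplified sketch of the rule as stated above, not a kernel implementation; the function name `advertised_window` and its parameters are made up for illustration.

```python
def advertised_window(free_space, buffer_size, mss):
    """Advertise zero until the free space is worth announcing."""
    # Open the window only if a full segment fits or the buffer is at least half empty.
    if free_space >= mss or free_space >= buffer_size / 2:
        return free_space
    return 0


print(advertised_window(free_space=100, buffer_size=8192, mss=1460))
print(advertised_window(free_space=1460, buffer_size=8192, mss=1460))
print(advertised_window(free_space=600, buffer_size=1000, mss=1460))
```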

Nagle's algorithm

Nagle's algorithm is a means of improving the efficiency of TCP/IP networks by reducing the number of packets that need to be sent over the network.

RFC 896 describes what Nagle called the "small-packet problem", where an application repeatedly emits data in small chunks, frequently only 1 byte in size. Since TCP packets have a 40-byte header (20 bytes for TCP, 20 bytes for IPv4), this results in a 41-byte packet for 1 byte of useful information, a huge overhead.

Nagle's algorithm works by combining a number of small outgoing messages and sending them all at once. Specifically, as long as there is a sent packet for which the sender has received no acknowledgment, the sender should keep buffering its output until it has a full packet's worth of output, thus allowing output to be sent all at once.

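In short, Nagle's algorithm accumulates data and sends it in one go: as long as a sent packet remains unacknowledged, the sender keeps new data in its buffer until a full MSS segment is available.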
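The send decision described above can be sketched as a predicate. This is a simplified model rather than an actual stack's code; the function name `nagle_should_send` and its parameters are hypothetical.

```python
def nagle_should_send(buffered_bytes, mss, send_window, unacked_in_flight):
    """Decide whether buffered data may be transmitted now under Nagle's rule."""
    if buffered_bytes == 0:
        return False
    if buffered_bytes >= mss and send_window >= mss:
        return True                  # a full-sized segment is ready, send it
    # Small data goes out immediately only when nothing is awaiting an ACK.
    return not unacked_in_flight


print(nagle_should_send(1, 1460, 65535, unacked_in_flight=False))    # first small write
print(nagle_should_send(1, 1460, 65535, unacked_in_flight=True))     # later small write
print(nagle_should_send(1460, 1460, 65535, unacked_in_flight=True))  # full segment
```

Applications that need low latency for small writes can turn the algorithm off per socket with the `TCP_NODELAY` option.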

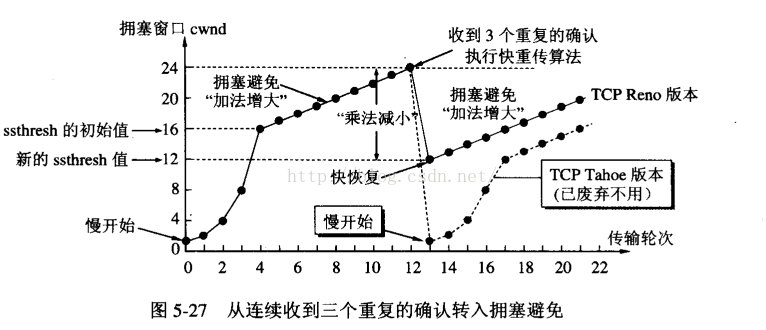

Congestion control

The final main aspect of TCP is congestion control. TCP uses a number of mechanisms to achieve high performance and avoid congestion collapse, where network performance can fall by several orders of magnitude. These mechanisms control the rate of data entering the network, keeping the data flow below a rate that would trigger collapse.

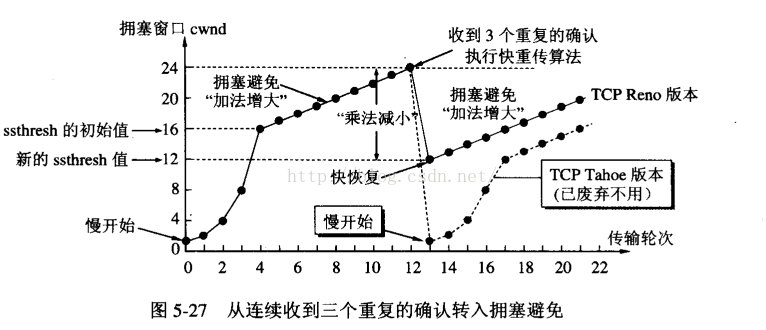

Modern implementations of TCP contain four intertwined algorithms: slow-start, congestion avoidance, fast retransmit, and fast recovery.

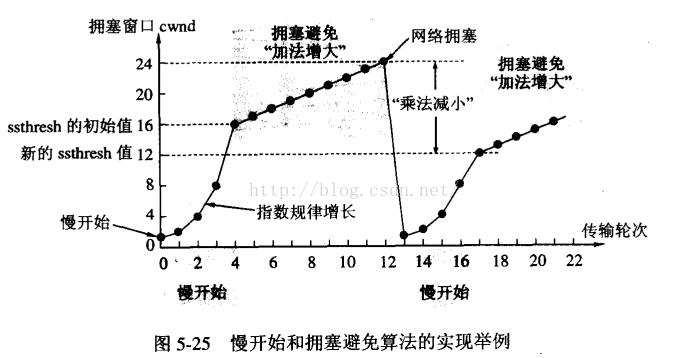

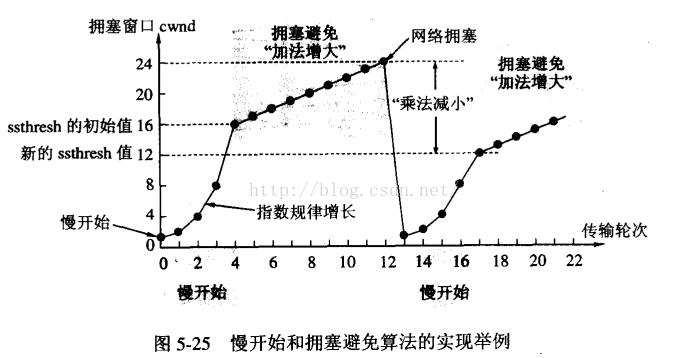

Slow start

Slow-start is used in conjunction with other algorithms to avoid sending more data than the network is capable of transmitting, that is, to avoid causing network congestion.

Slow start begins with an initial congestion window (cwnd) of 1, 2, 4 or 10 MSS. The congestion window is increased by one MSS with each acknowledgement (ACK) received, effectively doubling the window each round-trip time. The transmission rate keeps increasing under slow start until a loss is detected, the receiver's advertised window (rwnd) becomes the limiting factor, or ssthresh is reached.

Congestion avoidance

Once ssthresh is reached, TCP changes from slow-start algorithm to the linear growth (congestion avoidance) algorithm. At this point, the window is increased by 1 segment for each round-trip delay time (RTT).

Fast recovery

When a loss occurs, a fast retransmit is sent, half of the current CWND is saved as ssthresh and as new CWND, thus skipping slow start and going directly to the congestion avoidance algorithm. The overall algorithm here is called fast recovery.

Fast retransmit

Fast retransmit is an enhancement to TCP that reduces the time a sender waits before retransmitting a lost segment.

A TCP sender normally uses a simple timer to recognize lost segments. If an acknowledgement is not received for a particular segment within a specified time, the sender will assume the segment was lost in the network, and will retransmit the segment.

Duplicate acknowledgement is the basis for the fast retransmit mechanism. Duplicate acknowledgement occurs when the sender receives more than one acknowledgement with the same acknowledgment number.

When a sender receives several duplicate acknowledgements, it can be reasonably confident that the segment with the next higher sequence number was dropped. A sender with fast retransmit will then retransmit this packet immediately without waiting for its timeout.

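Reno algorithm:

Start with slow start; on reaching ssthresh, switch to congestion avoidance; when packet loss is detected, perform a fast retransmit and then enter fast recovery.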
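The Reno behaviour can be illustrated with a per-round-trip trace of the congestion window, measured in MSS. This is a deliberately simplified sketch: loss is assumed to be detected through duplicate ACKs, the window is capped at ssthresh when slow start ends, window inflation during recovery is ignored, and the floor of 2 MSS on ssthresh is an assumption. The function name `reno_cwnd_trace` and its parameters are made up for illustration.

```python
def reno_cwnd_trace(rounds, ssthresh, loss_rounds, initial_cwnd=1):
    """Return the congestion window (in MSS) at the start of each round trip."""
    cwnd = initial_cwnd
    trace = []
    for rnd in range(rounds):
        trace.append(cwnd)
        if rnd in loss_rounds:
            # Fast retransmit, then fast recovery: halve and skip slow start.
            ssthresh = max(cwnd // 2, 2)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)   # slow start: double every RTT
        else:
            cwnd += 1                        # congestion avoidance: +1 MSS per RTT
    return trace


print(reno_cwnd_trace(rounds=10, ssthresh=16, loss_rounds={6}))
```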

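# sysctl net.ipv4.tcp_available_congestion_control // available congestion control algorithms

# sysctl net.ipv4.tcp_allowed_congestion_control // congestion control algorithms allowed for use

# sysctl net.ipv4.tcp_congestion_control // default congestion control algorithm

Default congestion control algorithm: cubic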

Kernel parameters

net.ipv4.tcp_rmem

Receive buffer (auto-tuned).

This is a vector of 3 integers: [min, default, max]. These parameters are used by TCP to regulate receive buffer sizes.

TCP dynamically adjusts the size of the receive buffer from the defaults listed below, in the range of these values, depending on memory available in the system.

net.ipv4.tcp_wmem

Send buffer (auto-tuned).

This is a vector of 3 integers: [min, default, max]. These parameters are used by TCP to regulate send buffer sizes.

TCP dynamically adjusts the size of the send buffer from the default values listed below, in the range of these values, depending on memory available.

net.ipv4.tcp_keepalive_time

How long a connection must be idle before TCP starts sending keepalive probes (default: 7200, in seconds).

The number of seconds a connection needs to be idle before TCP begins sending out keep-alive probes.

net.ipv4.tcp_keepalive_intvl

Interval before resending a keepalive probe that got no response (default: 75, in seconds).

The number of seconds between TCP keep-alive probes.

net.ipv4.tcp_keepalive_probes

Maximum number of keepalive probes sent before the TCP connection is considered dead (default: 9).

The maximum number of TCP keep-alive probes to send before giving up and killing the connection if no response is obtained from the other end.
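Taken together, the three keepalive parameters determine how long it takes to notice a dead peer on an idle connection, and they can also be overridden per socket. The sketch below is hedged on two points: the `TCP_KEEPIDLE`, `TCP_KEEPINTVL` and `TCP_KEEPCNT` constants are platform-specific (available on Linux), so they are applied only if present, and the helper names are made up for the example.

```python
import socket


def keepalive_detection_time(idle=7200, interval=75, probes=9):
    """Approximate seconds before an idle connection to a dead peer is dropped."""
    return idle + interval * probes


def enable_keepalive(sock, idle=7200, interval=75, probes=9):
    """Turn on keepalive and, where supported, override the sysctl defaults."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    options = (("TCP_KEEPIDLE", idle), ("TCP_KEEPINTVL", interval), ("TCP_KEEPCNT", probes))
    for name, value in options:
        if hasattr(socket, name):  # skip constants this platform does not define
            sock.setsockopt(socket.IPPROTO_TCP, getattr(socket, name), value)


print(keepalive_detection_time())  # with the defaults listed above
```

With the defaults, a silent peer is detected only after a little over two hours, which is why long-lived services often lower these values per socket.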

net.ipv4.tcp_fin_timeout

For a connection closed by the local end, how long TCP stays in the FIN-WAIT-2 state (in seconds).

This specifies how many seconds to wait for a final FIN packet before the socket is forcibly closed.

net.ipv4.tcp_max_tw_buckets

Maximum number of sockets held in the TIME-WAIT state at the same time.

net.ipv4.tcp_max_syn_backlog

Maximum length of the SYN queue.

net.ipv4.tcp_syncookies

When the SYN queue overflows, enable SYN cookies to defend against SYN flood attacks (half-open connection attacks).

net.ipv4.tcp_sack

Enables selective acknowledgment (SACK).

net.ipv4.tcp_window_scaling

Enables window scaling.

net.ipv4.tcp_syn_retries

For an active open, how many times the kernel retries the SYN before giving up.

The maximum number of times initial SYNs for an active TCP connection attempt will be retransmitted.

net.ipv4.tcp_synack_retries

After receiving a SYN, how many times the kernel retransmits the SYN/ACK before giving up.

The maximum number of times a SYN/ACK segment for a passive TCP connection will be retransmitted.

net.ipv4.tcp_retries2

For an established TCP connection, how many times a TCP packet is retransmitted before giving up.

The maximum number of times a TCP packet is retransmitted in established state before giving up.

net.core.somaxconn

Maximum length of the listen queue for each listening port on the system.

net.core.optmem_max

Maximum size of the ancillary (option memory) buffer allowed per socket.